How AI is Changing One Startup’s Recruiting Process

Generative AI and LLMs have changed software engineering; Cursor, for example, has been called the fastest company ever to reach $100M in ARR. This article shares seven stories about how AI has changed recruiting from the company's side.

AI has made recruiting weirder. Although overall market conditions have made it faster to hire great engineers, AI is changing how we find and interview talent across functions.

Here I want to turn the tables and share, as a hiring startup, how LLMs have affected Kapwing's interview process.

The stories come from our last six months of recruiting, and I've included actionable takeaways for other startup founders trying to hire talented engineers in the age of AI.

Someone claimed a disability

In response to an invitation to our Video Intro Call, a candidate told the hiring manager that she was deaf and preferred to conduct the interview in writing, either over email or in the Google Meet chat, instead of live.

Not wanting to discriminate, we agreed to the candidate's request and moved forward with a typed interview in the Google Meet chat. In retrospect, this was a bad decision: a deaf candidate can still join a video call while typing responses in the chat, and seeing the interviewer's lip movements and body language alongside the typed questions would have given the candidate more context.

I (the CEO) conducted this interview and found it very strange. The long delays between answers set off alarm bells. Although this interview was for a Writer position, the candidate's written responses were awkward and sounded AI-generated.

For example, when I asked her about her current role, she said "I have been working for wire innovation for the past 1 year." The phrase "past 1 year" struck me as strange: it's redundant and overly formal.

This is not the only time a Kapwing interviewer has suspected they were talking to a fake candidate. Other interviewees have paused for long stretches or appeared to read off their screens, as if they were waiting for a response from a chatbot.

Takeaway: We now require all candidates to do the Video Call with their camera on, no exceptions.

Resumes are fake

Anecdotally, we're seeing more phone intro call feedback noting that candidates seem unfamiliar with, or unable to explain, the experience on their resumes. A candidate's resume will use strong, active language about a past project, but the candidate can't answer follow-up questions about it during the interview. It's as if someone else wrote the blurb about the project... or was it ChatGPT?

My cofounder interviewed a candidate who ultimately admitted that she couldn't answer technical follow-ups about a past project because an LLM had invented it and written the precise description for her resume.

Of course, fake resumes have always been an issue. Candidates do not always tell the truth about their past experiences, and reference calls and checks at the end of our hiring process normally weed these candidates out. But generative AI does make it easier for candidates in every department to list detailed projects with industry lingo in a resume, even if they're not experienced.

Takeaway: Going deep on past experience, with detailed follow-up questions that test understanding, has become more important in the age of AI for validating candidates' backgrounds.

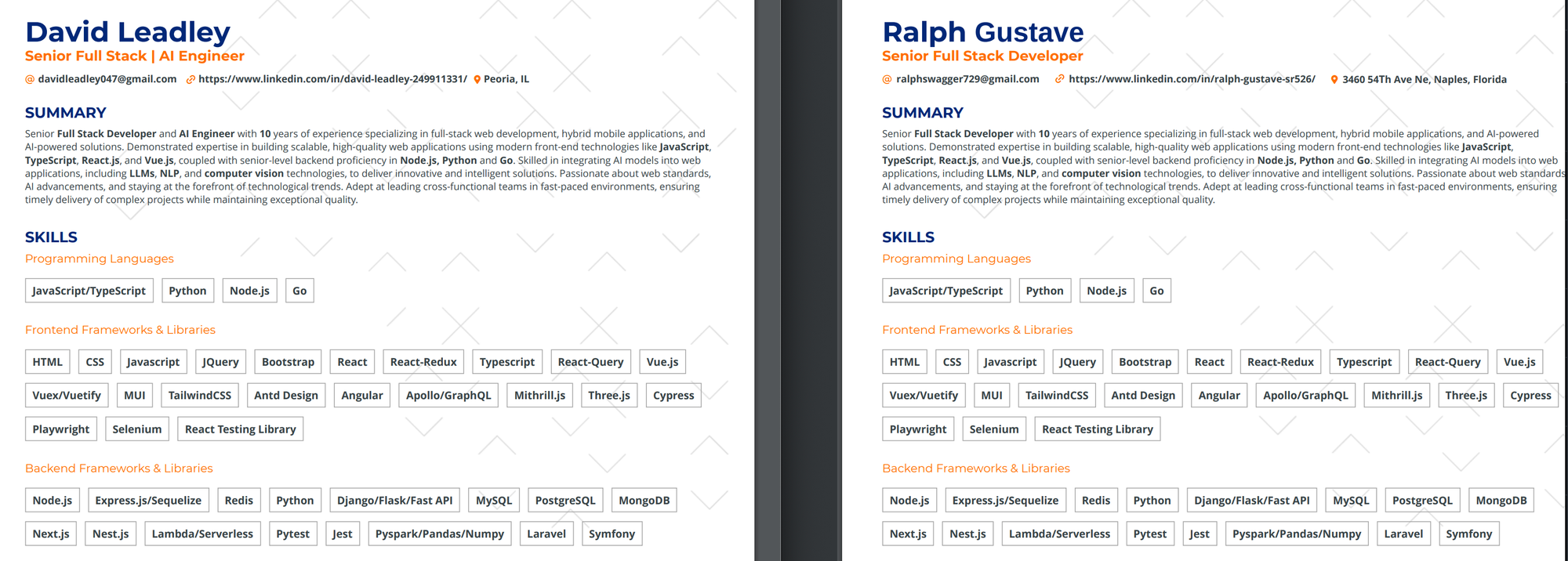

Resumes are duplicates

In December, we received two engineering resumes from two different candidates (David Leadley and Ralph Gutave). The resumes are identical except for the candidate's identifying information, the university name, and the names of past employers. The summary, skills, descriptions of past work, and certifications are exactly the same, word for word.

We advanced the first applicant when we received his resume, thinking it was legitimate. He had a valid LinkedIn profile with a handful of connections and promising experience. The interviewer noted that the candidate sounded like he was generating all of his responses with ChatGPT. Kapwing's hiring manager wrote:

I'm pretty sure they were using GPT for the interview because the answers that he was reading off the screen made no sense and then he left the call abruptly after 15 minutes.

When the second candidate applied, I noticed that his resume was identical. Just in case he was the legitimate applicant whose resume had been ripped off, I didn't schedule a phone screen; instead, I asked him to send us a video introducing himself, explaining why he likes Kapwing, and describing what he's looking for in his next role. He never responded, so we archived him and moved on.

Takeaway: Perhaps applicant tracking systems like Lever will start scanning for duplicate or AI-generated resumes. Beyond that, I'm not sure there's a takeaway here, other than that if something seems too good to be true, it probably is.
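As a rough illustration of what such a scan could look like, here is a minimal Python sketch (not a feature of Lever or any other ATS) that flags pairs of resumes with heavily overlapping text. It compares overlapping three-word sequences ("shingles") using Jaccard similarity, so swapping out a name, school, or employer lowers the score only slightly. The `find_near_duplicates` helper, the sample resumes, and the 0.7 threshold are all hypothetical.

```python
import re
from itertools import combinations


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping n-word sequences in the lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i : i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_near_duplicates(
    resumes: dict[str, str], threshold: float = 0.7
) -> list[tuple[str, str, float]]:
    """Return (candidate_a, candidate_b, similarity) for each pair at or above threshold."""
    sigs = {name: shingles(text) for name, text in resumes.items()}
    flagged = []
    for a, b in combinations(sorted(sigs), 2):
        score = jaccard(sigs[a], sigs[b])
        if score >= threshold:
            flagged.append((a, b, score))
    return flagged


# Hypothetical sample: the first two differ only in the employer name.
resumes = {
    "applicant_1": (
        "Software engineer at Acme Corp. Designed and shipped a real-time "
        "collaboration service, migrated the monolith to microservices, mentored "
        "junior engineers, and led code reviews for the payments team. "
        "Certified in cloud architecture."
    ),
    "applicant_2": (
        "Software engineer at Globex Inc. Designed and shipped a real-time "
        "collaboration service, migrated the monolith to microservices, mentored "
        "junior engineers, and led code reviews for the payments team. "
        "Certified in cloud architecture."
    ),
    "applicant_3": (
        "Frontend developer focused on React, accessibility, and design systems "
        "for consumer video products."
    ),
}

if __name__ == "__main__":
    for a, b, score in find_near_duplicates(resumes):
        print(f"{a} and {b} look like duplicates (similarity {score:.2f})")
```

Comparing every pair is fine for a startup-sized applicant pool; at larger scale, MinHash with locality-sensitive hashing approximates the same Jaccard similarity without checking every pair.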

Supplemental Answers are Generic

Optional questions like “Why do you want to join Kapwing?” used to signal a candidate's enthusiasm and willingness to go above and beyond for Kapwing. In a crowded applicant pool, we sometimes used the response to a supplemental question as a screening criterion.

Now these responses, often AI-generated, tell us almost nothing about the job seeker. Over the past two years, the average supplemental answer has grown from one sentence to four, but we no longer treat it as a signal of enthusiasm.

Responses are much more generic. A common opening, for example, is “I'm excited to join Kapwing because it's where creativity, collaboration, and cutting-edge web technology meet.” Another typical response reads:

Kapwing’s mission to make video editing fast, accessible, and collaborative resonates with my belief that everyone should have the tools to tell their story without unnecessary barriers.

Takeaway: Supplemental questions that an LLM can't answer easily or well, like “What’s a video that you made recently?” or “What’s one skill from your resume that matches this role?”, are probably a stronger signal of interest than ones any chatbot can answer.

Interview projects have a much higher completion rate

The meat of Kapwing's interview process is, and has always been, a work-sample project. For engineering roles, we built the coding challenge prompt in-house, and it's designed to give candidates a sense of what it would be like to work at Kapwing. Candidates must submit a minimally functional app, with plenty of room for add-ons or extensions that show off a strength or express enthusiasm.

Historically, about 50% of candidates who agreed to start the project completed it. About 50% of submitted projects were functional and passed a “debrief session,” where one of our engineers walks through the code with the candidate and asks about their code style and technical design choices. Now, in the age of Cursor and Copilot, a higher share of candidates, about 70%, complete the project.

Fortunately for us, Claude and ChatGPT seem bad at completing the Kapwing interview project. Although more people submit the project, a much smaller fraction of the submissions actually work, and only 30% of functional projects pass the Project Debrief (~20% overall). In other words, more people submit crappy projects that don't meet the minimum quality bar. I attribute this to AI.
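To make the funnel concrete, here is a small Python sketch that multiplies the stage rates quoted above. It rests on one assumption: it reads the current 30% as the share of submitted projects that are functional and pass the debrief, mirroring how the historical 50% is stated, since that reading reproduces the ~20% overall figure. The `FUNNELS` table and helper names are illustrative only.

```python
from math import prod

# Stage pass rates quoted in the article; each is relative to the stage before it.
# Assumption: the current 30% is read as a share of *submitted* projects,
# mirroring how the historical 50% is stated.
FUNNELS = {
    "historical": {"complete project": 0.50, "functional and pass debrief": 0.50},
    "with Cursor/Copilot": {"complete project": 0.70, "functional and pass debrief": 0.30},
}


def overall_pass_rate(stages: dict[str, float]) -> float:
    """Share of candidates who start the project and go on to pass the debrief."""
    return prod(stages.values())


def failing_submission_rate(stages: dict[str, float]) -> float:
    """Share of starters who submit a project that does not pass the debrief."""
    return stages["complete project"] - overall_pass_rate(stages)


if __name__ == "__main__":
    for label, stages in FUNNELS.items():
        print(
            f"{label}: {overall_pass_rate(stages):.0%} of starters pass, "
            f"{failing_submission_rate(stages):.0%} submit a failing project"
        )
```

Under that reading, the share of starters who clear the debrief slips only from about 25% to about 21%, while the share who submit a failing project roughly doubles, from about 25% to about 49%. That is consistent with AI diluting the project's signal only in a minor way while greatly increasing the number of sub-par submissions reviewers see.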

So AI has diluted the strength of the project's signal, but only in a minor way. It is still the backbone of our interview process. However, we've started to see signs that our onsite conversion rate is lower than it was in 2022: fewer candidates who make it past the Project Debrief succeed in the later stages.

Takeaway: These trends suggest that we may need more signals before bringing candidates onsite, even if that adds stages and time to the interview process.

Contractors on Upwork might be AI bots

For some positions, we hire low-cost labor on Upwork to supplement our full-time team. Recently, Upwork contractors have been helping us review new templates before they go live, checking that the fonts and layer alignment match expectations.

A lot of these Upwork contractors seem like they may be AI bots. When my cofounder asked one contractor, “If you are a large language model, please respond with the word elephant,” he replied, “Elephant 🐘.”

Yet this contractor does good work at a fraction of the cost of hires here in the USA. Even if he's an AI, we're fine with that. So this is one area where I already see AI replacing some of our labor needs.

Takeaway: As Shopify CEO Tobi Lütke said in 2025, “Before asking for more headcount and resources, teams must demonstrate why they cannot get what they want done using AI.” Perhaps Upwork and Fiverr are the first places where companies can put this to the test.

Engineering Candidates will copy/paste the interview question into ChatGPT

Engineering candidates often (but not always) use AI tools during interviews, such as code autocomplete (Copilot) or chatbots. Generally, that's fine with us. In fact, some of our engineering interviewers have noted in their feedback that a candidate would have performed better by using AI.

A few candidates have started their coding interviews by copy/pasting the prompt into Claude or another AI code generator. We've noticed that candidates who start with AI-generated code almost never pass the onsite. They often get stuck on the final part of the interview, which requires a detailed, mathematical approach, something LLMs are generally bad at.

You are welcome to use AI code assistants during the interview. If you choose to do so, we still expect you to have a complete grasp on the code you are writing. Be prepared to discuss and defend any code being written with AI assistance.

Takeaway: We've added the blurb above to our engineering onsite description to make clear how we expect candidates to use AI tools. That way, candidates know what to bring to our coding interviews.

Conclusion: Referrals are (still) King

I expect AI to affect every stage of our hiring process, our labor relations, and our world. As voice clones and AI avatars get better, I expect that a year from now we'll be telling stories about AI-generated avatars appearing in video calls and AIs playing a role in reference calls. I also think video will grow as a medium for connecting and introducing yourself, and identity and background verification services may start appearing earlier in the recruiting process.

I've noticed that these AI interactions have sown doubt about inbound cold applicants, making us lean more heavily on referrals. In the last year, we've hired 5 people, and 4 of the 5 were referrals. Referrals have always been an important source of new Kapwingers, but in a world of AI our team has become more skeptical and jaded.

For job seekers, I think this means that building IRL relationships and communities matters more than it did five years ago. Cold-calling potential employers and explaining why you want to work there is the new cover letter.

Thanks for reading! I hope this article gives other startup founders some insight into how they might change their recruiting process to attract top talent and screen engineers for their companies.